The Grad School Admissions Statistics We Never Had

The entire dataset and the very hacky code used to produce these plots are open-sourced on Github

I obsess over numbers. When I applied to college, knowing my SAT score was “good enough” wasn’t enough. I didn’t care that universities said their admissions “took into account many other factors”; I wanted to know precisely how many people with that score or higher got into the exact school and the exact program I wanted. It feels good to quantify.

It’s been four years since my undergraduate admission days, and I was really looking forward to graduate school applications. From folklore, the process seemed notably devoid of the many complex quirks of its undergraduate predecessor: athlete recruiting, affirmative action, alumni reservations, racial quotas and more. It seemed like a much purer meritocracy, and I expected that meritocracy to be reflected in the admissions data. Unfortunately, no such data existed.

The Current State of Graduate School Admissions Data

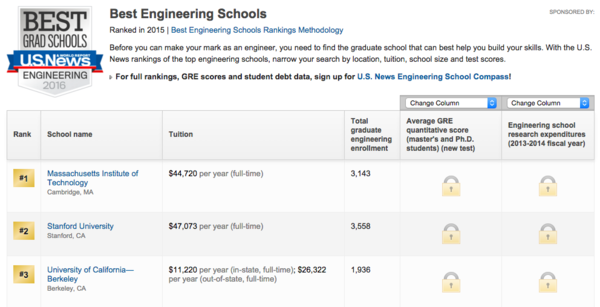

GRE data by university is paywalled on US News & World Report, the premier college ranking organization. Acceptance rates are available only from unverified one-offs such as this one on Quora. Verified college admissions statistics are few and far between and far less comprehensive than their undergraduate counterparts, such as this one for Stanford’s MS program. Magoosh, a GRE prep company, probably drove a lot of traffic to their site by publishing the first GRE statistics by college and major. Unfortunately, these are (as they admit) mere estimations, with a fairly hand-wavy methodology:

Using the limited score data in the US News & World Report’s release on graduate schools (for engineering and education), I created a block scale that assumes a standard difference between the ETS’s average of intended applicants of a specific major and the rank block (ie Ranks 1-10, 11-50, 51-100). Next I added the expected difference to the average score of the intended major and spread 2 points on either side of that to create a nice range.

– Magoosh

There are only a handful of good ways to figure out which graduate schools are best for you. Ideally, you would know the precise details of your intended area of research and which institutions the established professors in that field belong to. Online, the only way to gauge graduate school quality is through one of many ranked lists, each with its own abstruse methodology.

Fortunately, we can do a little something to help alleviate this problem.

Contents

- The Bird’s Eye: A Third of a Million Students

- A Shallow Dive: Playing with Numbers

- Your GRE Score Isn’t As Good As You Thought

- Getting Into Grad School May Just Be a Numbers Game

- Are International Students Held To Different Standards?

- A Shamelessly Formulaic College Ranking

- Which Majors Are The Smartest?

- Concluding Thoughts

The Bird’s Eye: A Third of a Million Students

GradCafe is a forum that allows prospective graduate students to submit their graduate school admissions decisions and details about their scores. It has been running since early 2006 (nearly 10 years now) and since early 2010 has been accepting structured GRE and GPA data. It has 372 thousand entries, and we managed to scrape 93% of them. Although similar scrapes have been attempted before, none reached this volume. A cursory look online reveals a few:

- BrewData, an R package by Nathan Welch, can be used to download cuts of GradCafe data.

- Scraped Admissions Data scrapes the data and generates several acceptance rate plots.

- Minimally Sufficient, a blog, writes about some experiments using BrewData.

- An Analysis of Physics Graduate Admissions data, an academic paper by Jan Mikkenje which uses GradCafe Physics admissions data.

One of the issues with this data is that some of the fields, like university name and program applied for, are user supplied. There are misspellings, names with abbreviations in parentheses and some without, university names with the “University” missing, capitalization issues, additional punctuation, over-specification (the internal college in the university), ambiguity (USC is both University Of Southern California and University Of South Carolina) and dozens of other inconsistencies we need to account for. Without effectively deduplicating these different spellings, our analysis would be far less accurate. Fortunately, through a mix of algorithmic and manual cleaning, we brought the university name field to 98% cleanliness. We haven’t yet cleaned the program field for all our data, only for the Computer Science fraction.
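To make the cleaning step concrete, here is a minimal sketch of one way to normalize user-typed university names. It is not the author’s cleaning code (that lives in the GitHub repository); the `ALIASES` and `AMBIGUOUS` tables and the helpers `normalize_key` and `canonical_name` are hypothetical, and a real pass would need a far larger alias table plus manual review.

```python
import re
import string
from typing import Optional

# Hypothetical alias table mapping normalized keys to one canonical name.
# These entries are illustrative only, not the article's cleaning rules.
ALIASES = {
    "mit": "Massachusetts Institute Of Technology",
    "massachusetts institute of technology": "Massachusetts Institute Of Technology",
    "stanford": "Stanford University",
    "stanford university": "Stanford University",
}

# Keys that refer to more than one real school and need manual resolution.
AMBIGUOUS = {"usc"}  # University Of Southern California vs. University Of South Carolina

# Periods and apostrophes are deleted ("M.I.T." -> "mit"); other punctuation becomes a space.
_PUNCT_TABLE = str.maketrans({c: ("" if c in ".'" else " ") for c in string.punctuation})


def normalize_key(raw: str) -> str:
    """Reduce a user-typed university name to a lowercase comparison key."""
    text = re.sub(r"\([^)]*\)", " ", raw.lower())  # drop "(MIT)"-style abbreviations
    text = text.translate(_PUNCT_TABLE)
    return " ".join(text.split())  # collapse runs of whitespace


def canonical_name(raw: str) -> Optional[str]:
    """Return the canonical name, None if ambiguous, or the bare key if unknown."""
    key = normalize_key(raw)
    if key in AMBIGUOUS:
        return None
    return ALIASES.get(key, key)
```

With these tables, `canonical_name("M.I.T.")` and `canonical_name("Massachusetts Institute of Technology (MIT)")` resolve to the same name, while `canonical_name("USC")` returns `None` so it can be resolved by hand. Unknown names fall through as their normalized key, which keeps them visible for a later manual pass.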

While the exact schema is documented in more detail here, the basic information this dataset gives us is:

- University Name: The name of the university applied to.

- Major: The name of the subject applied for, or the program applied to.

- Degree: The degree sought, typically MS or PhD (the form notably lacks some options).

- Season: The first character is F or S, denoting the Fall or Spring of the year the program is intended to start, and the last two characters represent the year. For example, F14 is Fall 2014.

- Decision: The decision the university made: Accepted, Rejected, Wait listed, Interview, or Other.

- Decision Method: One of email, website, post, phone or other.

- Decision Date: The date the decision was made.

- Undergraduate GPA: An optional field to state your undergraduate GPA. Some users state it on a 4.0 scale, some on 4.3 and some on 10.0, so be wary before using it.

- Is New GRE: Thankfully, old and new GRE scores are easily identifiable by a simple regular expression. Since there is no guideline for how students report the data, we created this field to make it easier to process. About 25% of reports have valid GRE scores, 70% of which are new GRE scores.

- GRE Verbal: A score on the Graduate Record Examination Verbal section, either between 130 and 170 (new GRE) or between 200 and 800 (old GRE).

- GRE Quant: A score on the Graduate Record Examination Quant or Math section, either between 130 and 170 (new GRE) or between 200 and 800 (old GRE).

- GRE Writing: A score between 0 and 6, at intervals of 0.5, on the GRE Analytical Writing section.

- GRE Subject: A score between 200 and 1000 on a GRE Subject Test. This column isn’t particularly useful because there’s no way of telling which subject the test was in; at best, you can guess based on the reported major.

- Status: The background of the applicant: American, International, International with US degree or Other.

- Post Date: The date this decision was reported.

- Comments: Additional comments. Previously, this field was used to report more GRE and GPA info, and about 1000 or so of those entries can be recovered with some smart regex matching.
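Several of these fields need interpretation before analysis. The sketch below shows one hypothetical way to decode the Season code, tell old from new GRE scores, and put GPAs on a common scale. The function names are illustrative; the GRE check uses numeric ranges as a stand-in for the regular expression the article mentions, and the GPA rescaling is a crude linear assumption, not an official conversion.

```python
import re
from typing import Optional, Tuple

SEASON_RE = re.compile(r"^([FS])(\d{2})$")


def parse_season(code: str) -> Tuple[str, int]:
    """Parse a season code such as 'F14' into ('Fall', 2014).

    Assumes every season falls in the 2000s, which holds for data since 2006.
    """
    match = SEASON_RE.match(code.strip().upper())
    if match is None:
        raise ValueError(f"unrecognized season code: {code!r}")
    term = "Fall" if match.group(1) == "F" else "Spring"
    return term, 2000 + int(match.group(2))


def _gre_scale(score: int) -> Optional[str]:
    """Classify a single Verbal or Quant score by the range it falls in."""
    if 130 <= score <= 170:
        return "new"
    if 200 <= score <= 800:
        return "old"
    return None


def is_new_gre(verbal: int, quant: int) -> Optional[bool]:
    """True for the 130-170 scale, False for 200-800, None if invalid or mixed."""
    v_scale, q_scale = _gre_scale(verbal), _gre_scale(quant)
    if v_scale is None or v_scale != q_scale:
        return None
    return v_scale == "new"


def gpa_on_4(gpa: float) -> Optional[float]:
    """Crudely rescale a GPA to 4.0 by guessing its scale from its magnitude.

    Assumption: values up to 4.0 are on a 4.0 scale, up to 4.3 on a 4.3 scale,
    and up to 10.0 on a 10.0 scale. A 3.9 on a 4.3 scale is indistinguishable
    from a 3.9 on a 4.0 scale, so this is only a rough heuristic.
    """
    if gpa <= 0:
        return None
    if gpa <= 4.0:
        return gpa
    if gpa <= 4.3:
        return gpa * 4.0 / 4.3
    if gpa <= 10.0:
        return gpa * 4.0 / 10.0
    return None
```

Rows where `is_new_gre` returns `None` (a Verbal score on one scale and a Quant score on the other, or an out-of-range value) are best set aside rather than guessed at.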

The sheer detail makes this data particularly empowering and lets us answer a plethora of questions. Splitting the data in one of many ways can give us valuable insight into admissions that may previously have been only a latent bias among professors, or perhaps unveil the hidden secrets of a magic “admission formula”.

Currently, it’s widely acknowledged (in different proportions depending on who you ask) that the most critical factors for getting into grad school, particularly PhD programs, are:

- Research Experience: Professors love to see research experience, especially with colleagues they are acquainted with and in the candidate’s field of interest. Papers published at important conferences, and first or second authorship on an academic publication, add tremendous value.

- Recommendations: Widely regarded as the most important factor in admissions. Good recommendations, especially from professors who are highly regarded or well known in their field, are instrumental to admission.

- Undergraduate GPA: Many PhD programs have strict GPA cutoffs of 3.5 or 3.6. Admissions committees like to see not only a strong GPA, but also advanced graduate-level classes that show your expertise.

- GRE: While the GRE is typically dismissed as merely a sanity check or a screening filter that may raise a red flag or two, we investigate how true this is later in the article.

- Repute of your undergraduate institution: While many like to downplay this factor, it anecdotally seems to make a difference. Perhaps this is because it correlates not only with how smart you must have been to be admitted there, but also with the strength of the curriculum, professors who are more likely to be known at other prestigious universities, and a better calibration of your GPA and coursework.

While it feels untoward to complain about such high-quality data, a few more fields, particularly undergraduate institution name and some measure of whether the candidate had research experience and published papers, might have made it possible to train a high-confidence classifier on the data!

A Shallow Dive: Playing with Numbers

Before diving in, it was essential to ensure my exhilaration with this phenomenal chunk of data was warranted. Like any cynic, I asked: can one really trust self-reported data? I’d imagine not all self-reported data is accurate, but is it reasonable enough to make inferences from?

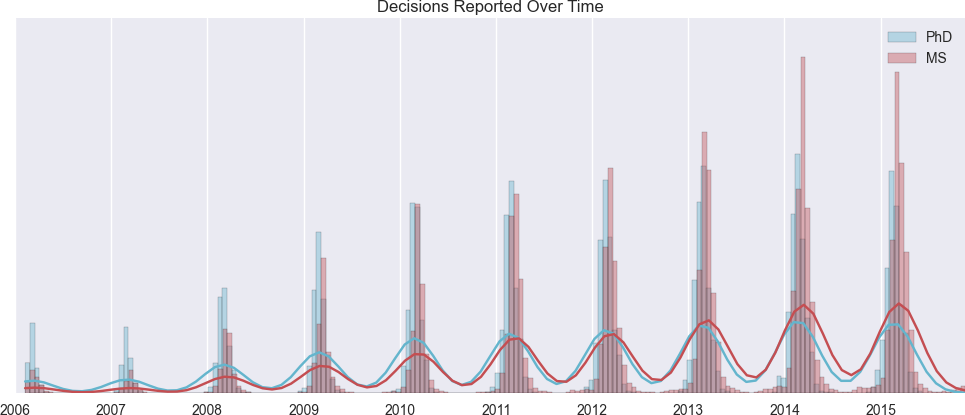

Growth

Let’s first look at the decision trends over time. To visualize them, we plot a Kernel Density Estimate (KDE) of the decision dates for different degrees. The majority of the degrees reported, often as a result of the constraints of GradCafe itself, were MS and PhD degrees; manually looking through comments and majors reveals that MA and many other Masters degrees are often misreported as MS degrees. PhDs constituted 63% of about 350,000 results and MS around 35%. We hypothesized that decision dates would peak early in the year, with Masters decisions made slightly after PhD decisions. That turned out to be true.
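A kernel density estimate places a small Gaussian bump on every observation and sums them into a smooth curve. The minimal sketch below, assuming decision dates are mapped to day of year and using a hand-picked bandwidth, shows how PhD and Masters peaks could be compared. The helper names and the dates in the usage example are hypothetical, not drawn from the dataset, and the sketch ignores wrap-around at the year boundary, which matters little when most decisions land between January and April.

```python
import math
from datetime import date
from typing import Callable, Sequence


def day_of_year(d: date) -> int:
    """Map a decision date to 1..366 so different years share one axis."""
    return d.timetuple().tm_yday


def gaussian_kde(samples: Sequence[float], bandwidth: float) -> Callable[[float], float]:
    """Return a Gaussian kernel density estimate over the given samples."""
    if not samples or bandwidth <= 0:
        raise ValueError("need at least one sample and a positive bandwidth")
    norm = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x: float) -> float:
        # One Gaussian bump per sample, centered on that sample, summed and normalized.
        return norm * sum(math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples)

    return density


def peak_day(density: Callable[[float], float]) -> int:
    """Day of year where the estimated density is highest."""
    return max(range(1, 367), key=density)


# Hypothetical decision dates for illustration only.
phd_days = [day_of_year(date(2014, 2, d)) for d in (10, 12, 15, 20)]
ms_days = [day_of_year(date(2014, 3, d)) for d in (5, 12, 20)]
phd_density = gaussian_kde(phd_days, bandwidth=7.0)
ms_density = gaussian_kde(ms_days, bandwidth=7.0)
print(peak_day(phd_density), peak_day(ms_density))
```

The bandwidth controls smoothness: too small and every reported date becomes its own spike, too large and the PhD and Masters peaks blur together.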

From its humble beginnings in 2006, with 7,500 annual decisions, reports grew at an average of 30% every year all the way to 2014. This year, reports are down 3% with a month of limited activity left, a plateauing trend similar to another site.
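An “average of 30% every year” can mean two different things, and the text doesn’t say which. The sketch below computes both: the arithmetic mean of year-over-year rates, and the constant compound rate linking the first and last years. As a worked example, if 30% were a compound rate, 7,500 reports in 2006 would grow to about 7,500 × 1.3^8 ≈ 61,000 by 2014. The function names are illustrative.

```python
from typing import Sequence


def compound_growth_rate(start: float, end: float, years: int) -> float:
    """Constant annual rate r such that start * (1 + r) ** years == end."""
    return (end / start) ** (1.0 / years) - 1.0


def mean_yearly_growth(counts: Sequence[float]) -> float:
    """Arithmetic mean of the year-over-year growth rates."""
    rates = [later / earlier - 1.0 for earlier, later in zip(counts, counts[1:])]
    return sum(rates) / len(rates)


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `rate` for `years` years."""
    return start * (1.0 + rate) ** years


print(round(project(7500, 0.30, 8)))  # 2006 to 2014 is 8 years of growth
```

The two averages can diverge sharply when growth is uneven: yearly counts of 100, 200 and 100 give a mean yearly growth of 25% but a compound rate of 0%.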

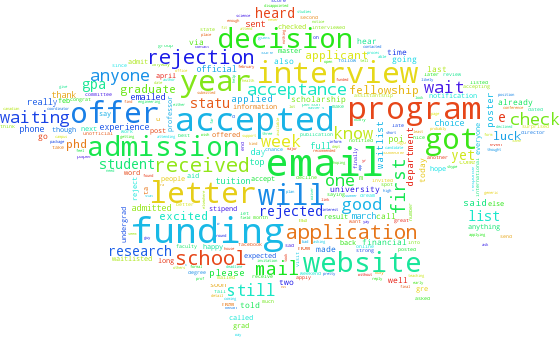

Comments

More than 60% of posters included comments with their decisions. To get a sense of what people talked about, we took 3 million words of comments and made a word cloud. Aside from the obvious, words like “funding” and “fellowship” reflect familiar graduate student concerns.
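A word cloud is essentially a picture of word frequencies. The minimal sketch below shows the counting step, assuming simple lowercase tokenization and a tiny hypothetical stopword list; a plotting library would then size each word by its count. It is not the code used for the article’s figure.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# A tiny illustrative stopword list; a real word cloud would use a fuller one.
STOPWORDS = frozenset(
    {"the", "and", "for", "but", "was", "with", "that", "this", "have", "from", "not", "you", "are"}
)


def top_words(comments: Iterable[str], n: int = 10) -> List[Tuple[str, int]]:
    """Count words across comments, skipping stopwords and tokens of 1-2 letters."""
    counts: Counter = Counter()
    for comment in comments:
        for word in re.findall(r"[a-z']+", comment.lower()):
            if len(word) > 2 and word not in STOPWORDS:
                counts[word] += 1
    return counts.most_common(n)


# Hypothetical comments for illustration.
sample = ["Full funding and a fellowship!", "No funding yet.", "Got the fellowship, funding included"]
print(top_words(sample, 2))
```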

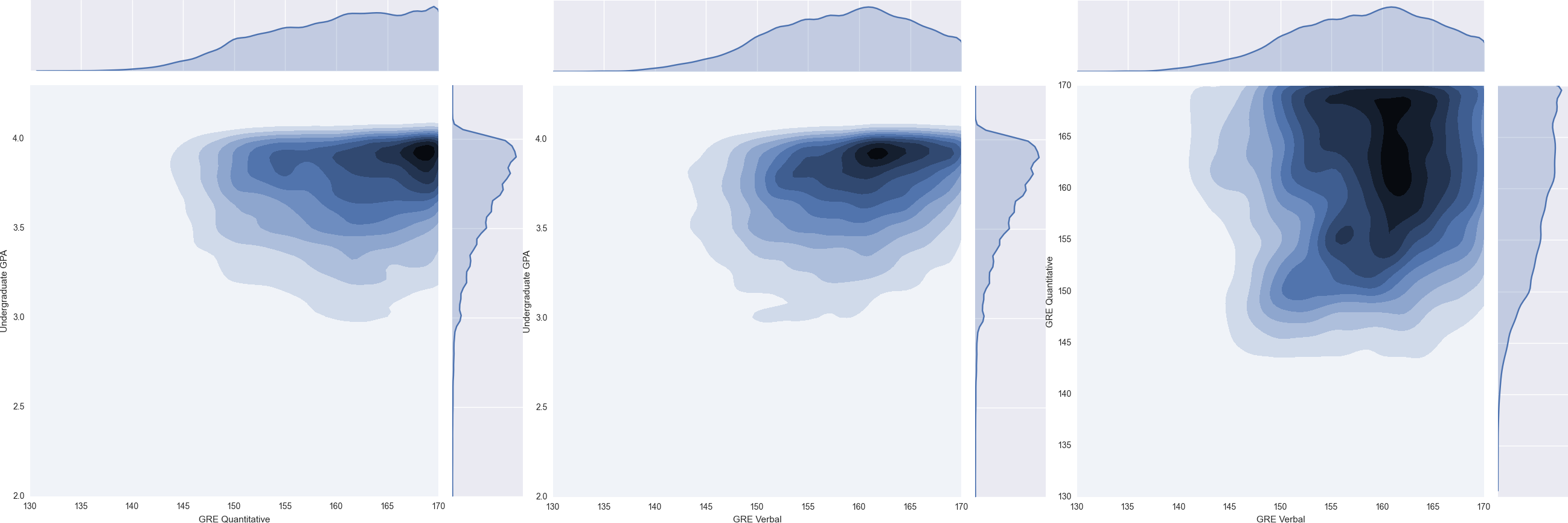

GPA and GRE

There were more than 50 thousand people reporting both their undergraduate GPA and their GRE scores (I only counted the new GRE, scored out of 170). Plotting the data revealed fairly expected trends. Quantitative scores were very high, peaking at the maximum possible score of 170 (which, on the GRE, is no superhuman feat). Verbal scores were relatively high as well, as were GPAs. Most GPAs hovered in the 3.5 to 4 region, which passes the sanity check: graduate schools, particularly PhD programs, tend to have a minimum GPA cutoff for applicants, usually around 3.5, and most colleges grade on a 0 to 4 scale, with a few counting an A+ as 4.3, making their theoretical maximum 4.3.

Your GRE Score Isn’t As Good As You Thought

Most graduate schools ask for some form of standardized testing. For medical school, it’s the MCAT. For business school, it’s the GMAT. For most other graduate schools, it’s the GRE, the Graduate Record Examination. It’s never the deciding factor in your graduate school application, but is typically used to screen applicants on a universal metric. It’s similar to its undergraduate counterpart, the SAT, in many ways. It has three sections: Reading (Verbal), Math (Quantitative) and Writing (Analytical Writing). The Writing section is scored between 0 and 6 in increments of 0.5, and is usually considered far less important. In the new GRE, the other two sections are scored between 130 and 170.

They say “PDFs are where data goes to die.”

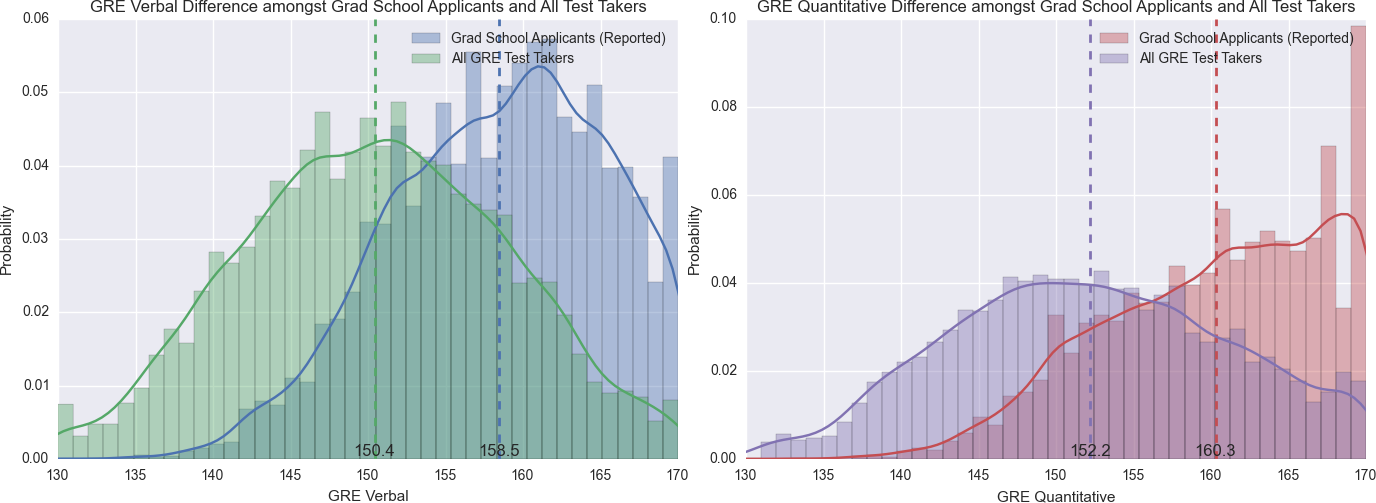

Unsurprisingly, GRE distributions are buried in PDFs. The data reveals that the GRE Verbal average is about 150 and the Quant average about 152, fairly bang in the middle of the 130-170 range. As a GRE taker, I found these numbers fairly low, especially for an applicant pool looking to go to graduate school; I couldn’t imagine PhD candidates at even mid-tier schools averaging a 150 in Verbal and Quant. In the data I had, the Verbal average for all grad school applicants was 158.5 and the Quant average was 160.4. For PhD applicants, those numbers jumped to 159.5 and 162. Interestingly, they barely moved when filtered to accepted students, rising only to 159.8 and 162.6 respectively.

Before jumping to any conclusions, I should mention that there are a fair number of biases in this data:

- Candidates are likelier to self-report when they are accepted.

- They’re likely to post results for multiple applications.

- More successful candidates are likelier to post their personal numbers.

- Ostensibly, applicants who engage heavily with an online forum are far more serious about their applications than the test-taking pool as a whole, leading to better results and numbers.

Having said that, it’s still unlikely that those biases would account for such a large difference. Later on, we see that acceptance rates computed from our data, while inflated, are within about a factor of two of those reported by the schools themselves. Our data further shows that although the forum skews towards better schools, there are plenty of applicants reporting data for mid-tier universities as well. Without attempting to estimate the precise contribution of each of these biases, it seems very plausible that the typical GRE scores of applicants are significantly higher than those of the entire test-taking population. To any GRE takers: your score might not be as awesome as the reported percentiles made it out to be!
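The percentile argument can be made concrete with a percentile-rank function: the same score lands at very different percentiles depending on which pool it is compared against. This is a minimal sketch; the mid-rank tie convention is a choice (a strict “percent below” definition would drop the tie term), and both score lists are hypothetical, not ETS or GradCafe data.

```python
from bisect import bisect_left, bisect_right
from typing import Sequence


def percentile_rank(scores: Sequence[float], x: float) -> float:
    """Percent of scores below x, counting scores equal to x as half below."""
    if not scores:
        raise ValueError("scores must be non-empty")
    ordered = sorted(scores)
    below = bisect_left(ordered, x)
    ties = bisect_right(ordered, x) - below
    return 100.0 * (below + 0.5 * ties) / len(ordered)


# Hypothetical Verbal scores, NOT the article's data: a broad test-taking
# pool versus a stronger, self-selected applicant pool.
all_takers = [140, 145, 150, 150, 155, 160]
applicants = [155, 158, 160, 162, 165, 168]

for pool_name, pool in (("all takers", all_takers), ("applicants", applicants)):
    print(f"160 among {pool_name}: {percentile_rank(pool, 160):.1f}th percentile")
```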

Getting Into Grad School May Just Be a Numbers Game

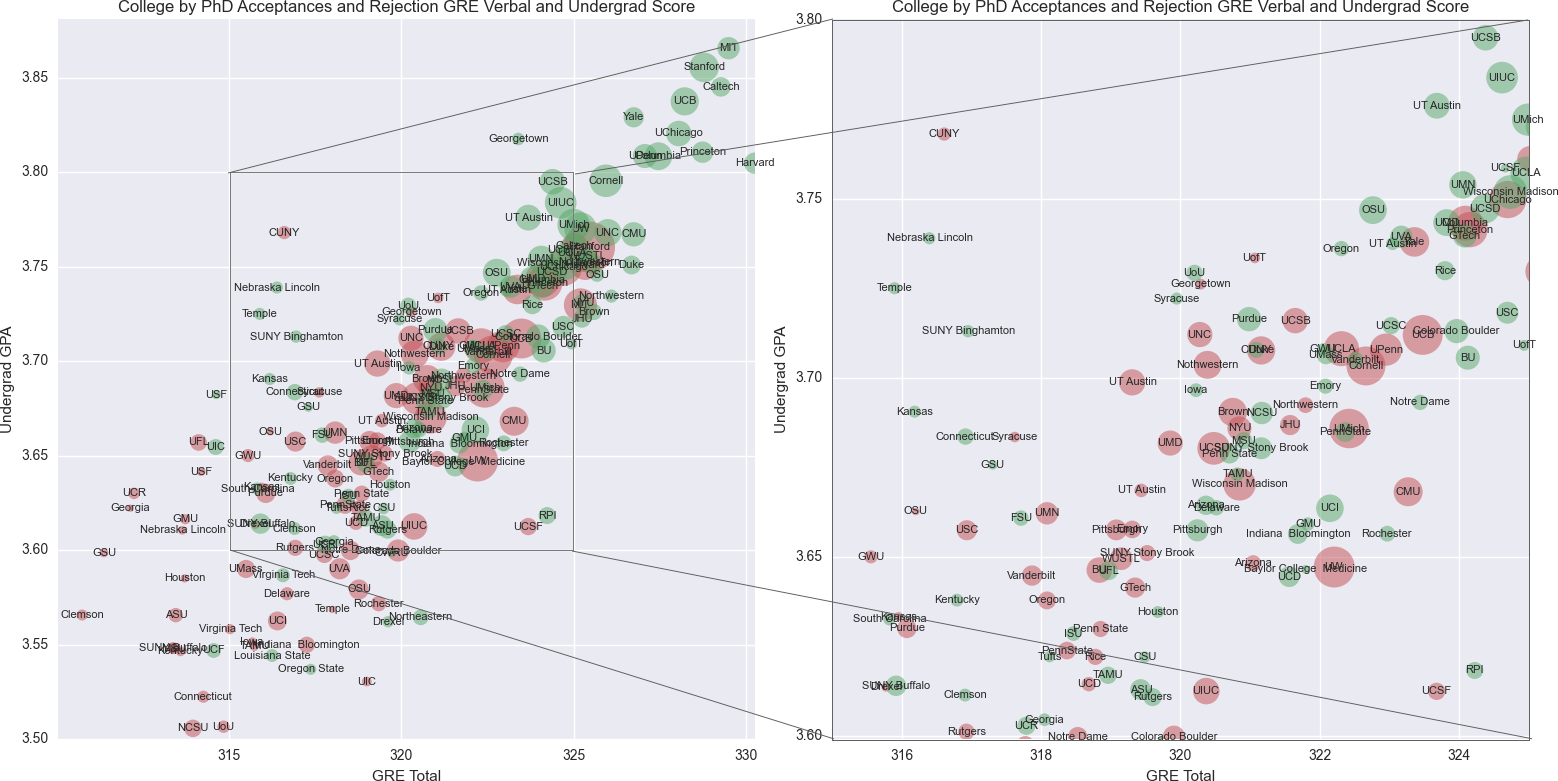

Scientists love beautiful solutions. Wouldn’t it be amazing to discover that admissions decisions are highly formula driven? Plug in a GRE score and a GPA and you’re all set. Alright, maybe not so amazing for the applicant. The question we set forth is: how much do these numbers alone affect the decision? Before we answer that, we need to verify whether accepted candidates at a particular institution even have higher GPAs and GRE scores than rejected ones. This beautiful Hans Rosling-esque animation depicts it.

Clearly, for PhD students, accepted applicants, on average, perform better than rejected applicants on all three fronts: GPA and both sections of the GRE. Better numbers have meant better odds of getting accepted.

Let’s take it a step further. Could we, with the data that we have, build a classifier that beats the naive baseline accuracy? That baseline is max(p, 1 - p), where p is the acceptance ratio: simply guessing that all applicants would be rejected, or all accepted, yields this accuracy. I tried some simple experiments and found that, at best, using numbers to predict admission yielded little to no improvement over the naive baseline for established, reputed colleges like Stanford and MIT. However, for mid-tier colleges, such as University Of North Carolina, Chapel Hill (UNC), University Of Virginia (UVA) and many other public colleges, numbers could predict admission decisions with up to 10-12% higher accuracy than the naive baseline, reaching absolute accuracies in the 75-80% range.
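The naive baseline and a simple classifier can be compared as sketched below. The specific classifier used in the experiments isn’t stated, so this assumes a plain logistic regression on standardized GPA, Verbal and Quant, trained by full-batch gradient descent in pure Python. The applicant rows are hypothetical, and for brevity the model is scored on its own training data; a real comparison should use held-out data or cross-validation.

```python
import math
import statistics
from typing import List, Sequence, Tuple


def baseline_accuracy(labels: Sequence[int]) -> float:
    """Accuracy of always predicting the majority class: max(p, 1 - p)."""
    p = sum(labels) / len(labels)
    return max(p, 1.0 - p)


def standardize(rows: Sequence[Sequence[float]]) -> List[List[float]]:
    """Z-score each column so GPA (~3.8) and GRE (~160) share one scale."""
    columns = list(zip(*rows))
    means = [statistics.mean(c) for c in columns]
    stds = [statistics.pstdev(c) or 1.0 for c in columns]  # guard constant columns
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X: List[List[float]], y: Sequence[int],
                   lr: float = 0.5, epochs: int = 2000) -> Tuple[List[float], float]:
    """Fit logistic regression weights and bias with full-batch gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def accuracy(X: List[List[float]], y: Sequence[int], w: List[float], b: float) -> float:
    """Fraction of rows whose thresholded prediction (p >= 0.5) matches the label."""
    preds = [1 if sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) >= 0.5 else 0 for xi in X]
    return sum(p == t for p, t in zip(preds, y)) / len(y)


# Hypothetical applicants: (GPA, Verbal, Quant), with 1 = accepted.
raw_rows = [
    (3.90, 165, 168), (3.80, 162, 167), (3.95, 160, 169), (3.85, 164, 166),
    (3.30, 150, 155), (3.40, 152, 158), (3.20, 155, 150), (3.50, 148, 160),
    (3.60, 150, 157), (3.10, 145, 152),
]
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]

X = standardize(raw_rows)
w, b = train_logistic(X, labels)
print(f"baseline {baseline_accuracy(labels):.2f}, model {accuracy(X, labels, w, b):.2f}")
```

Standardizing first matters: without it, GRE scores around 160 would dominate GPAs around 3.8 and slow gradient descent considerably.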

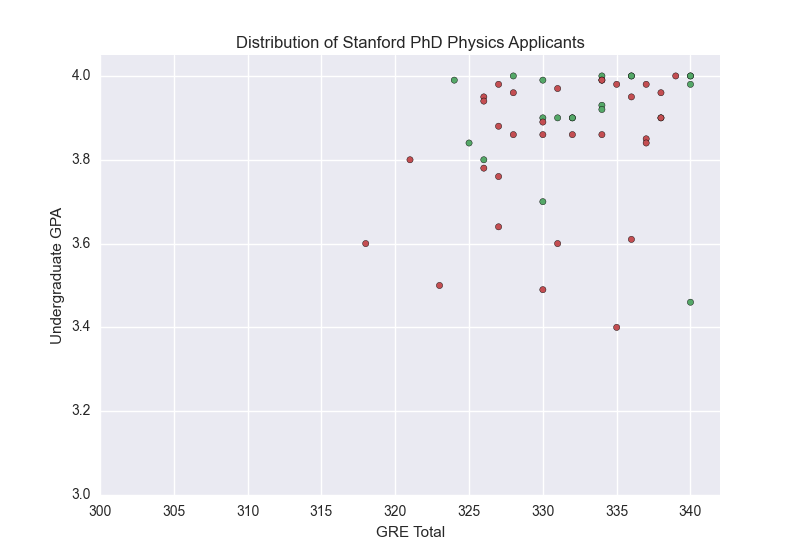

One might argue that classifying PhD acceptances at the level of a whole college isn’t fine-grained enough, and that taking the major into account would level the playing field. Unfortunately, the data is sparse at this granularity, and even visual inspection yields no conclusive evidence. Take the following distribution for Stanford PhD applicants in Physics:

This chart shows us that GPA is usually used as a screening bar, as is the GRE score. But even a high GPA and GRE score only improve your odds; they by no means guarantee an acceptance. Is graduate school merely a numbers game? The data says: numbers matter, but there’s more to it.

Are International Students Held To Different Standards?

I applied to the US for my undergraduate degree and boy, don’t I know how daunting it can be. It’s difficult enough trying to piece together what a college is like from things you read online, but on top of that you’re trying to figure out how in the world you’re going to shell out a quarter of a million dollars for this. Because the applicant pool in my vicinity was so small, I constantly found myself wondering where the admission bar really was relative to my own record. It frightened me that MIT’s undergraduate acceptance rate was 9.7% for US citizens and 3.3% for internationals. With this data, I sought to answer the question: is it harder for international students to get into grad school?

It turns out that when we compare International and American acceptance rates for PhD programs at the top 50 schools, International acceptance rates are on average 14% lower relative to the American rate (the median gap is 18.5%). That is, if the average school accepts American students at x%, it accepts Internationals at roughly 0.86x%. The average absolute difference is 6 percentage points. Below, I tabulated the ten universities that are comparatively hardest for international students. AR is the acceptance rate, (A) denotes American applicants and (I) denotes International ones.

| University Name | AR (A) | AR (I) | % Harder (relative) | Applicants (A) | Applicants (I) |
|---|---|---|---|---|---|
| Vanderbilt | 34.6% | 19.6% | 43% | 684 | 301 |
| UC Santa Barbara | 52.6% | 30.2% | 43% | 1129 | 602 |
| UC Berkeley | 33.1% | 19.9% | 40% | 2771 | 1599 |
| Georgia Tech | 74.3% | 45.9% | 38% | 366 | 628 |
| UFlorida, Gainesville | 66.3% | 43.8% | 34% | 489 | 447 |
| Cornell | 39.9% | 26.5% | 34% | 2060 | 1708 |
| Carnegie Mellon | 40.9% | 27.5% | 33% | 750 | 1117 |
| UC San Diego | 44.7% | 32.0% | 29% | 1616 | 1098 |
| UCLA | 48.9% | 35.2% | 28% | 2240 | 1272 |
| Johns Hopkins | 41.7% | 30.2% | 28% | 865 | 533 |
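The Harder column appears to be the international shortfall relative to the American rate, (AR(A) - AR(I)) / AR(A). The short sketch below recomputes it for three rows of the table and reproduces the listed values (43%, 40% and 28%), alongside the absolute gap in percentage points. The function name is illustrative.

```python
def relative_gap(ar_american: float, ar_international: float) -> float:
    """Percent by which the international acceptance rate falls short of the American one."""
    return 100.0 * (ar_american - ar_international) / ar_american


# (AR (A), AR (I)) in percent, copied from three rows of the table.
rows = {
    "Vanderbilt": (34.6, 19.6),
    "UC Berkeley": (33.1, 19.9),
    "Johns Hopkins": (41.7, 30.2),
}
for name, (ar_a, ar_i) in rows.items():
    print(f"{name}: {relative_gap(ar_a, ar_i):.0f}% harder, {ar_a - ar_i:.1f} points lower")
```

Under this definition, a 14% average gap corresponds to an international acceptance rate of about 0.86 times the American one.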

Just because international students have a statistically lower acceptance rate, however, doesn’t necessarily mean admission is harder for them. It may be that the bar is the same and fewer of them meet it. We can only reach a conclusion by comparing objective measures between the two applicant pools. Let’s look at their numbers.

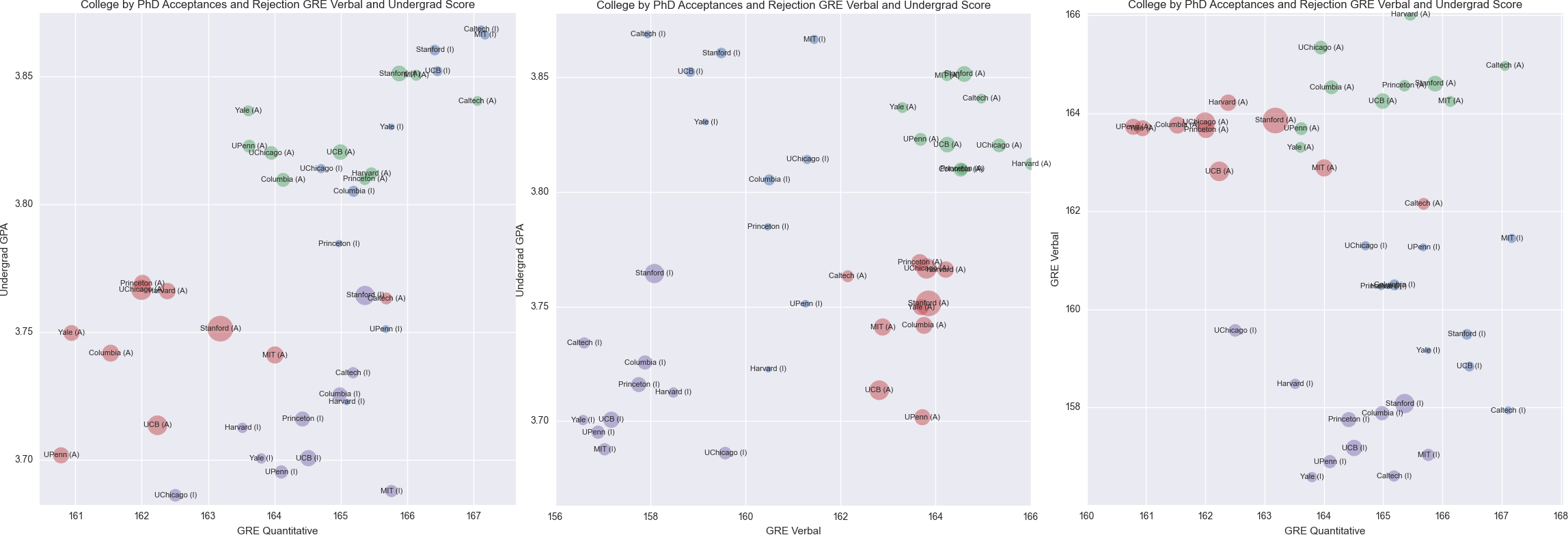

The data looks quite spectacular. When compared objectively, the four applicant pools (American accepts, American rejects, International accepts, and International rejects) each occupy their own quadrant when comparing any two metrics. We observe that:

- Accepted applicants typically have statistically significantly higher GPAs than their rejected counterparts, and slightly higher GRE Quant and Verbal scores.

- Internationals uniformly seem to have slightly higher GRE Quant scores and much lower GRE Verbal scores than their American counterparts.

- Amongst accepted students, International students still have much lower Verbal scores than their American counterparts. This suggests that they aren’t expected to match American applicants in the English department.

- In the GPA department, International students are by and large held to the same requirements as American ones.

- In the GRE Quantitative section, although accepted International students have higher average scores than their American counterparts, the difference isn’t significant. The bar seems to be the same.
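The significance test behind the “statistically significantly higher” observations isn’t specified. One reasonable choice for two groups with unequal variances is Welch’s t-test; the sketch below assumes that test and approximates the p-value with a normal distribution, which is adequate for groups of thousands of applicants but not for small samples. The function name and the GPA lists in the usage example are hypothetical.

```python
import math
import statistics
from statistics import NormalDist
from typing import Sequence, Tuple


def welch_t_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float, float]:
    """Return (t, degrees of freedom, approximate two-sided p-value)."""
    na, nb = len(a), len(b)
    se2_a = statistics.variance(a) / na  # squared standard error of a's mean
    se2_b = statistics.variance(b) / nb
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (na - 1) + se2_b ** 2 / (nb - 1))
    # Normal approximation to the t distribution; reasonable only for large samples.
    p = 2.0 * (1.0 - NormalDist().cdf(abs(t)))
    return t, df, p


# Hypothetical GPAs for illustration only.
gpa_accepted = [3.9, 3.8, 3.85, 3.95, 3.7]
gpa_rejected = [3.5, 3.6, 3.4, 3.55, 3.45]
t, df, p = welch_t_test(gpa_accepted, gpa_rejected)
print(f"t = {t:.2f}, df = {df:.1f}, p ~ {p:.2g}")
```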

Overall, it’s okay for international students to have lower verbal scores compared to American ones. The important discriminatory factors for acceptance seem to be your Quant score and your undergraduate GPA, and in these regards, International students don’t seem to be treated any differently.

A Shamelessly Formulaic College Ranking

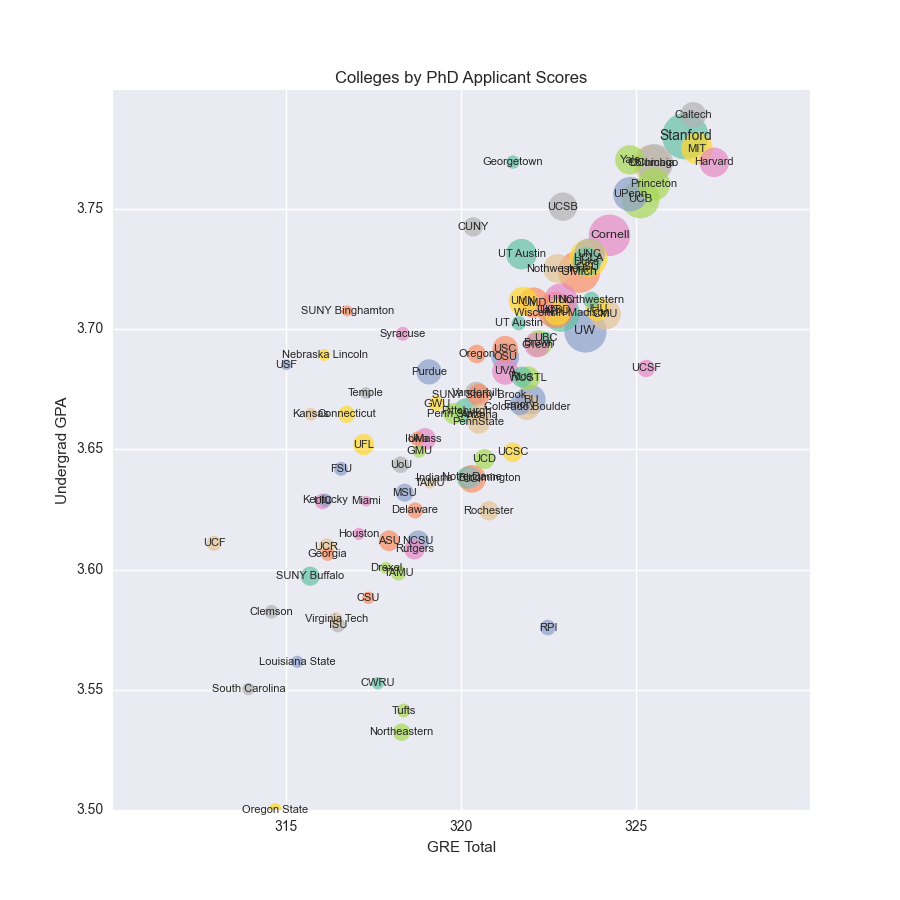

I dislike rankings. Let me preface with that, just in case the title wasn’t blatant enough. I do, however, love data. With all these numbers available, I just had to plug them in to see how well the self-reported data agreed with public knowledge of rankings. In fact, merely looking at the numbers of the applicant pool revealed a familiar ordering of universities:

Methodology

For a more formal ranking, we took PhD applicants across all majors and kept only colleges with at least 30 PhD applicants reporting their GPA and GRE scores. For these colleges, we computed how many standard deviations each college sits from the mean on acceptance rate (negated, since a lower rate means more selective), undergraduate GPA and the GRE, averaged these scores and sorted. Here are the top 20:

| University | Total Reports | Accepted + Rejected | Accept Rate | Reporting Grades | GPA 25% | GPA 50% | GPA 75% | GRE V 25% | GRE V 50% | GRE V 75% | GRE Q 25% | GRE Q 50% | GRE Q 75% |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MIT | 4140 | 3557 | 26.8% | 457 | 3.81 | 3.92 | 3.99 | 160 | 164 | 167 | 164 | 168 | 170 |
| Stanford | 6666 | 5860 | 24.1% | 1002 | 3.80 | 3.90 | 3.99 | 159 | 164 | 168 | 164 | 168 | 170 |
| Princeton | 4298 | 3887 | 25.2% | 480 | 3.74 | 3.88 | 3.96 | 160 | 164 | 168 | 163 | 167 | 169 |
| Yale | 4351 | 3776 | 24.7% | 390 | 3.72 | 3.90 | 3.97 | 158 | 164 | 167 | 161 | 165 | 169 |
| UC Berkeley | 5875 | 5272 | 27.5% | 692 | 3.80 | 3.90 | 3.96 | 160 | 164 | 168 | 163 | 166 | 169 |
| Harvard | 4431 | 3771 | 28.5% | 406 | 3.72 | 3.85 | 3.95 | 163 | 166 | 168 | 163 | 167 | 170 |
| Caltech | 1732 | 1466 | 34.7% | 313 | 3.79 | 3.90 | 3.97 | 159 | 164 | 168 | 165 | 168 | 170 |
| UChicago | 4787 | 3981 | 30.6% | 627 | 3.73 | 3.87 | 3.96 | 161 | 165 | 168 | 162 | 166 | 170 |
| Penn | 4704 | 3722 | 27.8% | 523 | 3.70 | 3.86 | 3.95 | 159 | 163 | 167 | 161 | 166 | 169 |
| Columbia | 5605 | 4480 | 29.3% | 631 | 3.72 | 3.85 | 3.93 | 159 | 164 | 168 | 162 | 166 | 169 |
| Rockefeller | 146 | 120 | 29.2% | 35 | 3.74 | 3.85 | 3.97 | 155 | 165 | 169 | 161 | 163 | 166 |
| Cornell | 5550 | 4669 | 33.3% | 772 | 3.71 | 3.87 | 3.95 | 158 | 163 | 167 | 161 | 165 | 169 |
| CMU | 2916 | 2388 | 32.3% | 439 | 3.67 | 3.83 | 3.94 | 157 | 161 | 166 | 164 | 168 | 170 |
| Northwestern | 4305 | 3554 | 29.8% | 502 | 3.64 | 3.83 | 3.93 | 159 | 162 | 167 | 161 | 166 | 168 |
| Duke | 3352 | 2679 | 27.4% | 383 | 3.60 | 3.81 | 3.94 | 158 | 162 | 167 | 163 | 166 | 169 |
| Brown | 3080 | 2632 | 23.4% | 311 | 3.60 | 3.75 | 3.91 | 157 | 163 | 166 | 161 | 166 | 168 |
| UCSF | 698 | 501 | 23.2% | 151 | 3.70 | 3.74 | 3.90 | 159 | 165 | 168 | 158 | 163 | 166 |
| WUSTL | 1815 | 1346 | 37.1% | 263 | 3.61 | 3.85 | 3.94 | 160 | 164 | 167 | 160 | 163 | 167 |
| NYU | 3464 | 2580 | 30.7% | 306 | 3.61 | 3.80 | 3.94 | 160 | 163 | 167 | 161 | 164 | 167 |
| UMich | 5595 | 4509 | 35.4% | 826 | 3.68 | 3.83 | 3.94 | 158 | 162 | 166 | 161 | 165 | 168 |

Some of the major takeaways from the college level statistics and rankings are:

- Aside from the usual suspects, Rockefeller and UCSF make the list as elite medical institutions in the (elite) cities of New York and San Francisco respectively.

- Most top colleges have median Verbal scores in the 160-165 range and Quant scores in the 165-170 range.

- Most median undergraduate GPAs are in the 3.8 - 3.9 range.

- Official sources report much lower acceptance rates: MIT reports about 14.2% (3,390 of 23,884) across all graduate programs, and Stanford reports 16-21% over the last 3 years. Acceptance rates in our data are about 1.5-2x the official values, which reveals clear bias in the self-reported data, likely stemming from the biases discussed earlier.

These rankings aren’t very useful given that they aren’t broken down by major; splitting by major makes the data too sparse for most programs. Additionally, they rank purely by selectivity criteria (GPA, GRE and acceptance rate), not by research quality or any other qualitative metric.
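The ranking recipe from the Methodology paragraph can be sketched as follows, under assumptions the text leaves open: each college is summarized by its acceptance rate, median GPA and median Verbal plus median Quant, and z-scores use the population standard deviation. `CollegeStats`, `zscores` and `composite_rank` are illustrative names. The first four rows use figures from the table above; “Tiny U” is hypothetical and is dropped by the 30-applicant cutoff.

```python
import statistics
from typing import Dict, List, NamedTuple, Sequence


class CollegeStats(NamedTuple):
    acceptance_rate: float  # percent
    median_gpa: float
    median_gre: float       # assumed: median Verbal + median Quant
    n_reporting: int        # PhD applicants reporting GPA and GRE


def zscores(values: Sequence[float]) -> List[float]:
    """Distance of each value from the mean, in population standard deviations."""
    mu, sd = statistics.mean(values), statistics.pstdev(values)
    if sd == 0:
        return [0.0] * len(values)
    return [(v - mu) / sd for v in values]


def composite_rank(colleges: Dict[str, CollegeStats], min_reporting: int = 30) -> List[str]:
    """Rank eligible colleges by the mean of -z(acceptance), z(GPA) and z(GRE)."""
    names = [n for n, s in colleges.items() if s.n_reporting >= min_reporting]
    z_ar = zscores([colleges[n].acceptance_rate for n in names])
    z_gpa = zscores([colleges[n].median_gpa for n in names])
    z_gre = zscores([colleges[n].median_gre for n in names])
    score = {n: (-a + g + r) / 3.0 for n, a, g, r in zip(names, z_ar, z_gpa, z_gre)}
    return sorted(names, key=lambda n: score[n], reverse=True)


colleges = {
    "MIT": CollegeStats(26.8, 3.92, 164 + 168, 457),
    "Stanford": CollegeStats(24.1, 3.90, 164 + 168, 1002),
    "Caltech": CollegeStats(34.7, 3.90, 164 + 168, 313),
    "Rockefeller": CollegeStats(29.2, 3.85, 165 + 163, 35),
    "Tiny U": CollegeStats(10.0, 4.00, 340, 12),  # hypothetical; below the cutoff
}
print(composite_rank(colleges))
```

On this small subset the order comes out MIT, Stanford, Caltech, Rockefeller, but because z-scores depend on which colleges are included, a subset ranking need not match the full one.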

Which Majors Are The Smartest?

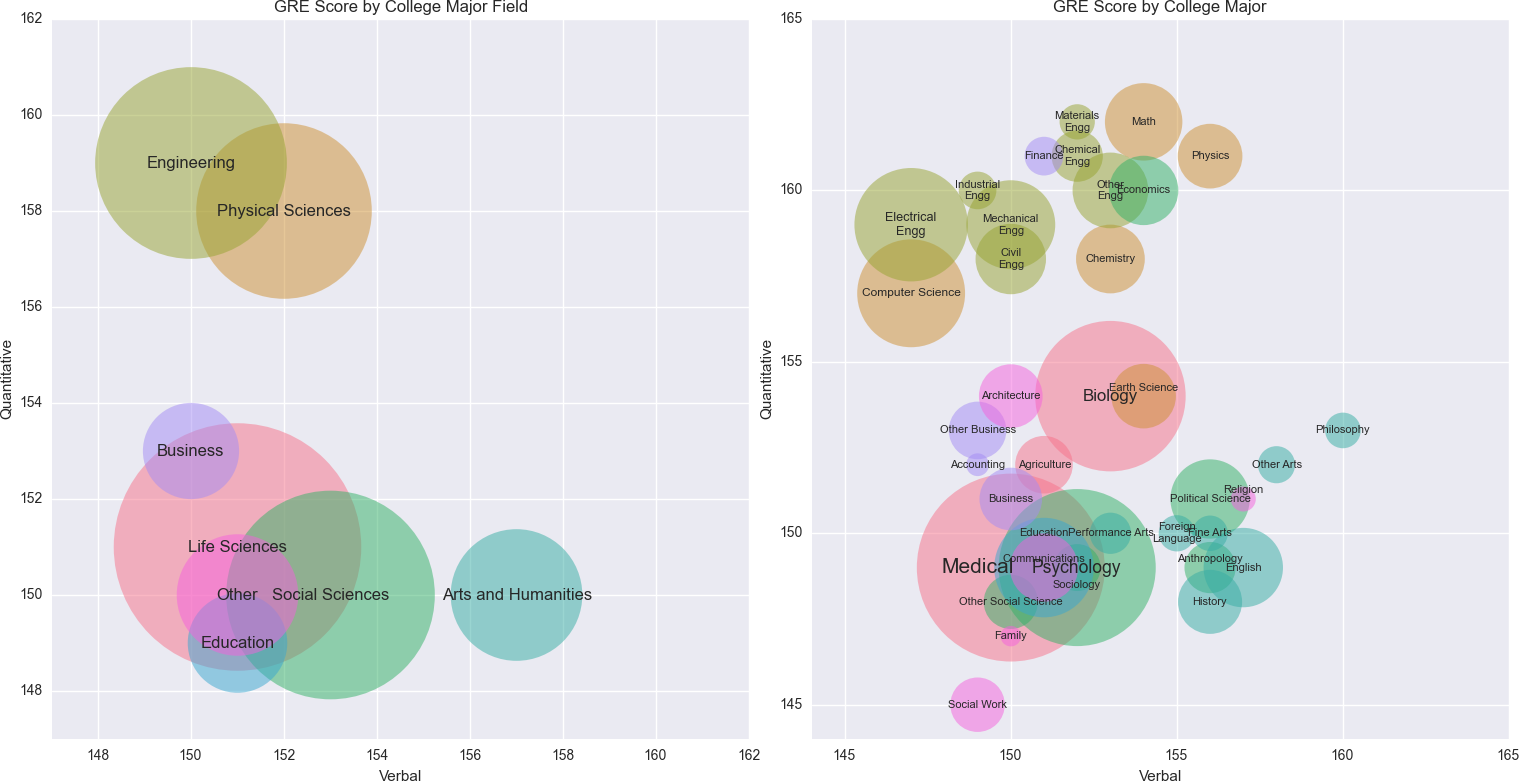

Jonathan Wai, a research scientist at Duke, has already written about this in a popular Quartz article entitled Your college major is a pretty good indication of how smart you are. In it, he looks at the GRE, the SAT, and several other data sources to make his claim. Let’s see how our data compares.

If we grab data for all GRE test-takers straight from ETS, buried in this obscure PDF, we already begin to see a picture. Breaking the data down by the seven high-level fields (Engineering, Physical Sciences, Business, Life Sciences, Social Sciences, Arts and Humanities, and Education) plus Other tells a story. We observe three major clusters:

- Good at math, bad at verbal: Engineering, Physical Sciences

- Bad at math, good at verbal: Arts and Humanities

- Non-verbal, non-math skillsets: Business, Life Sciences, Social Sciences, Education, Other

What’s amazing is that, even when we further break these fields into their constituent majors, most majors hover around the original position of their parent field, with a couple of exceptions (notably, Business and Economics). There are some fairly interesting conclusions we can draw from this split:

- All Engineers are fairly good at math.

- Finance and Economics are fairly math-heavy majors.

- Math is the best at Quantitative but surprisingly, Philosophy, not English, is the best at Verbal.

- Computer Science is the worst at Verbal and Social Work the worst at Quant, presumably because studying each involves little development of the respective skill.

- All things taken into account, Physics, Math, Economics and Philosophy majors reign supreme, in that order.

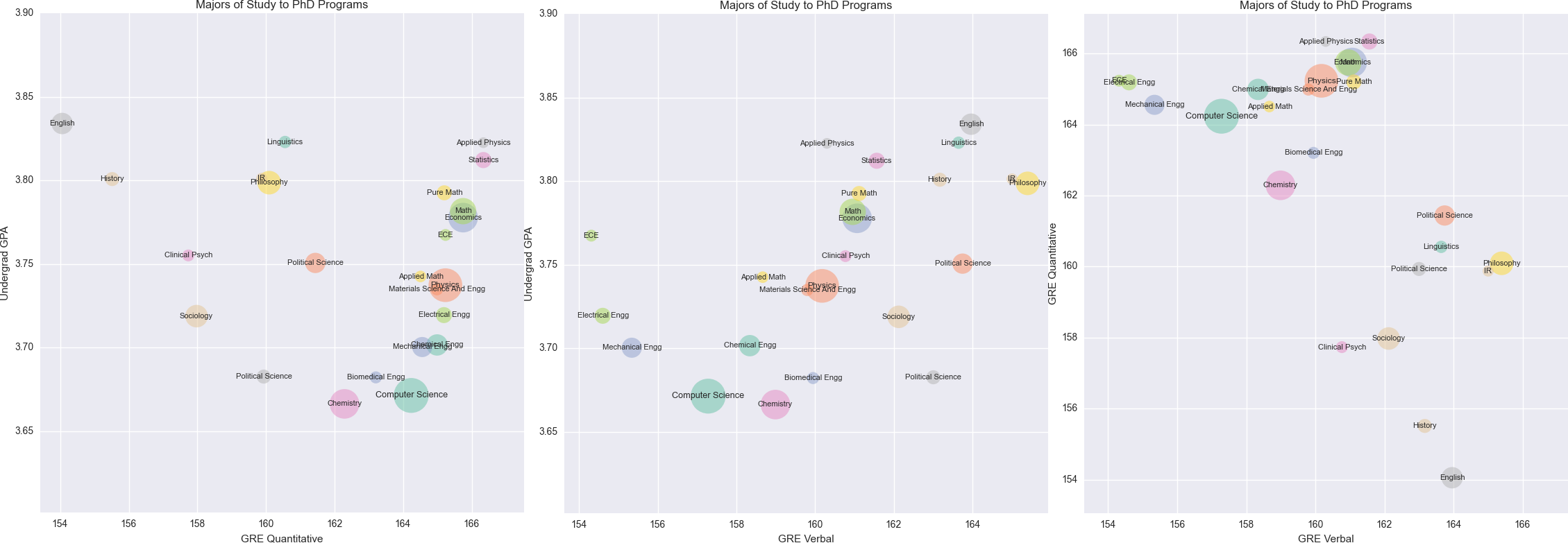

The extent to which our data echoes these conclusions is remarkable. Our data shows that:

- In GRE aggregate, the top majors we see are Statistics, Math, Pure Math (all branches of Math), Economics, Physics and Philosophy: the exact same four fields we identified before. Political Science and International Relations follow closely behind.

- The non-verbal, non-math cluster we identified previously doesn’t appear in this dataset. Social Sciences moves to “good at verbal”. Life Sciences students probably apply to medical school and don’t take the GRE. We don’t see much of Education in this dataset.

- Computer Science and Electrical Engineering still remain the weakest at Verbal, with the additional entry of Mechanical Engineering.

- English replaces Social Work as the poorest in quantitative skills.

- On the GPA front, a variable we hadn’t witnessed previously, the Arts and pure Sciences average between 3.75 and 3.8 while engineers average between 3.65 and 3.7. This finally gives those college engineers the license to be a tad grumpier about their grades.

- English heads up the pack with the highest GPA while Computer Science and Chemistry trail at the lowest.
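The major-level aggregates boil down to a group-by and a sort. The sketch below assumes that “GRE aggregate” means Verbal plus Quant averaged per major, and uses hypothetical records; `rank_majors` and its `min_count` parameter, a guard against ranking majors with too few reports, are illustrative.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def rank_majors(records: Iterable[Tuple[str, int, int]],
                min_count: int = 1) -> List[Tuple[str, float]]:
    """Rank majors by mean Verbal + Quant, highest first."""
    totals: Dict[str, List[int]] = defaultdict(list)
    for major, verbal, quant in records:
        totals[major].append(verbal + quant)
    means = {m: statistics.mean(v) for m, v in totals.items() if len(v) >= min_count}
    return sorted(means.items(), key=lambda item: item[1], reverse=True)


# Hypothetical new-GRE records: (major, verbal, quant).
records = [
    ("Physics", 162, 168),
    ("Physics", 160, 166),
    ("English", 166, 152),
    ("Computer Science", 155, 167),
]
for major, mean_total in rank_majors(records):
    print(f"{major}: {mean_total:.1f}")
```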

If by smartness we mean possessing strong core skills like quantitative and verbal ability (and not specialized knowledge), there seems to be overwhelming evidence that Physics, Mathematics, Economics and Philosophy majors (at least those who apply to PhD programs) are the smartest majors.

Concluding Thoughts

Graduate school is a primary path to the growth of human knowledge. It’s the channel that begins careers of research and learning. It attracts the brightest minds from all over the world to solve the unsolved problems and discover the undiscovered things. For such an esteemed institution to be so conspicuously devoid of data is startling. This work takes a small step towards revealing and analyzing this data. I’ve tried to cover all my bases, but I’m sure there are plenty more insights to be drawn from the data.

We looked at:

- The first, largest, and most detailed open dataset of grad school admissions, with 350,000 thoroughly cleaned entries.

- Growth, validity, and high level statistics.

- The substantially higher GRE scores of grad school applicants compared with the full test-taking pool.

- To what extent graduate school admissions depend purely on the numbers - your GPA and your GRE scores.

- How international students are given more leeway on their verbal score but are still held to largely the same standard as their American counterparts.

- Acceptance rates, percentiles of GPAs and GRE scores for the top 20 colleges, and a purely selectivity and numbers driven ranking.

- The smartest majors by core skills are undeniably Math, Physics, Economics and Philosophy.

Every year, nearly 2 million students apply to graduate school. Perhaps, this will give them a little more to work with than before.

